Master thesis projects

Detecting Personality during Group Interactions from Multimodal Behaviors

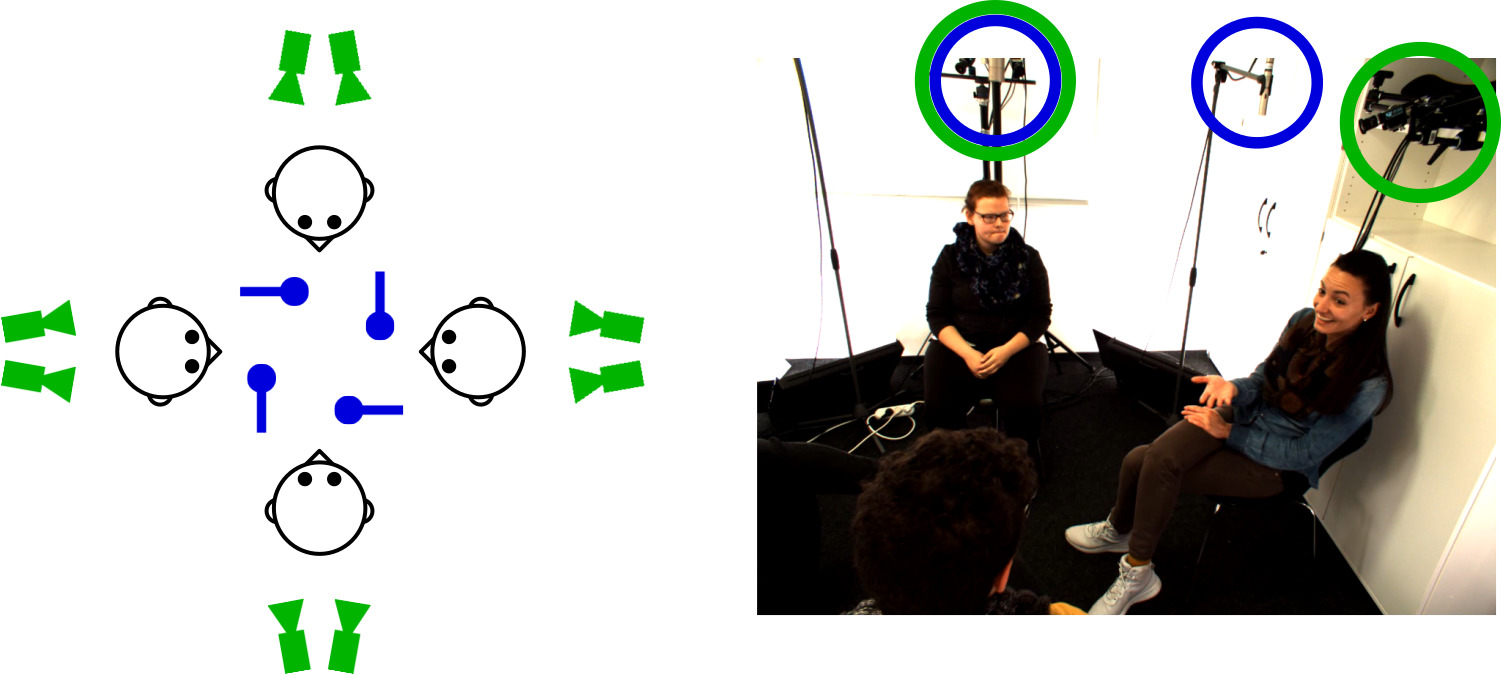

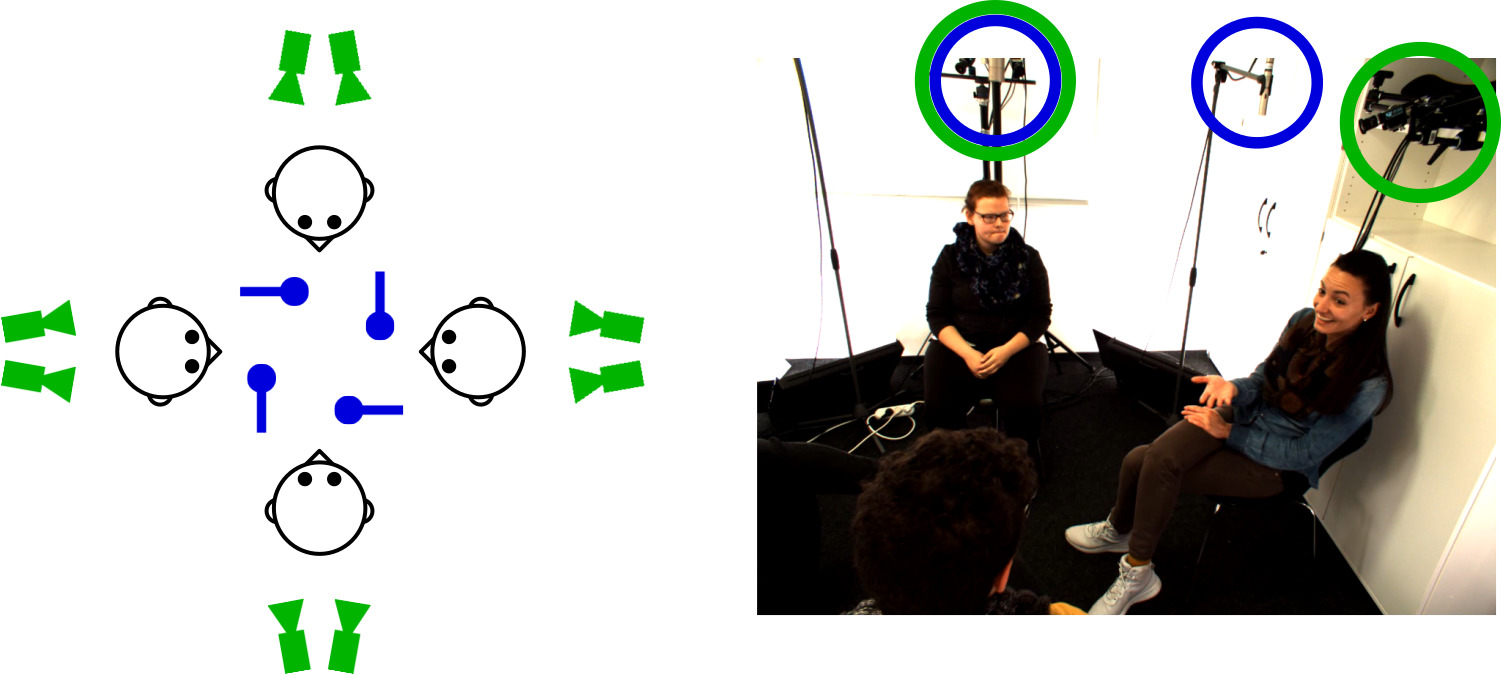

Description: This project is to detect personality from multimodal behavioural data within small groups. You will use a dataset (as shown in the figure) that recorded videos when 3 or 4 participants had a group discussion (Müller et al. 2018). Participants also reported their personality by completing a Big Five personality questionnaire. Speech, body movement, posture, eye contact and facial expression can be extracted from the video to predict a participant’s personality via machine learning or deep learning.

Supervisor: Guanhua Zhang

Distribution: 20% Literature, 20% Data Preparation, 40% Implementation, 20% Data Analysis

Requirements: Strong programming skills, experience with machine learning/deep learning and data analysis

Literature: Philipp Müller, Michael Xuelin Huang, and Andreas Bulling. 2018. Detecting Low Rapport During Natural Interactions in Small Groups from Non-Verbal Behaviour. Proceedings of the 23rd International Conference on Intelligent User Interfaces (IUI).

Personality Recognition under Data Scarcity

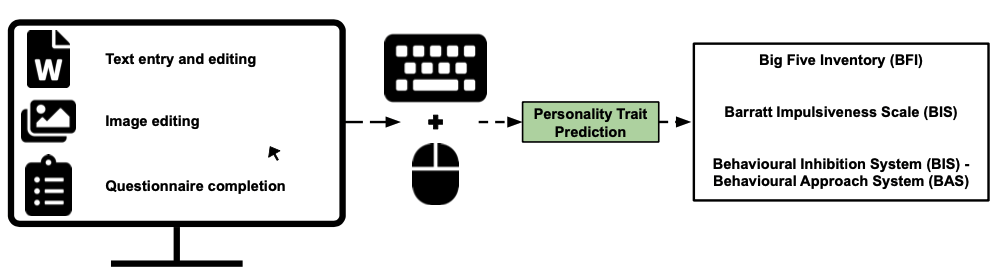

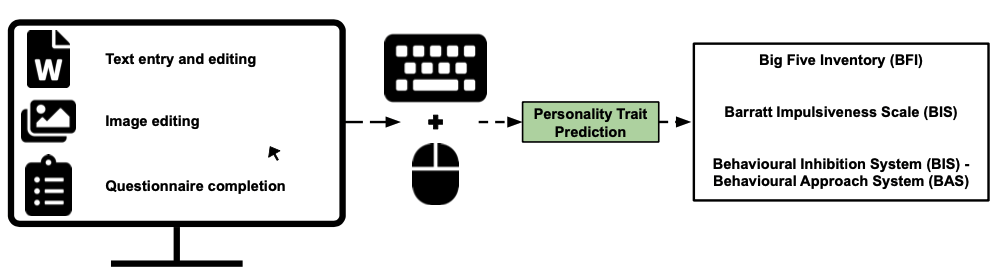

Description: Personality is stable, which means no matter how much data we collect from a person, he or she always has only one corresponding score for a personality trait that will be the ground-truth label in recognition. For example, we may collect data for hours from a user, but all the samples map to only one value. This may lead to a data scarcity problem, i.e., training a traditional recognition model, especially a regression model, requires the dataset to cover a wide range of score values. In this case, hundreds or even thousands of participants who have different personality scores have to be recruited, increasing the difficulty of data collection and personality research. Therefore, this project will try to handle the problem via data science and machine learning techniques, e.g., data augmentation, embedding, representation learning, unsupervised learning, etc., to effectively predict personality from a small amount of data. Several personality datasets, like AMIGOS (Miranda-Correa et al. 2018) shown in the figure, can be used to test and evaluate your approaches.

Supervisor: Guanhua Zhang

Distribution: 20% Literature, 10% Data Preparation, 50% Implementation, 20% Data Analysis

Requirements: Strong programming skills, experience with machine learning and data analysis

Literature: Juan Abdon Miranda-Correa, Motjaba Khomami Abadi, Nicu Sebe, and Ioannis Patras. 2018. AMIGOS: A dataset for affect, personality and mood research on individuals and groups. IEEE Transactions on Affective Computing.

Mental Face Reconstruction by Interactive Morphing

Description: Mental image reconstruction is an interesting and challenging task with the goal of generating an image of something that only resides in a user's mind. It is used in various fields, most commonly in computer criminology, where it is used to reconstruct the face of a criminal from the memory of an eyewitness. The goal of this project is to build an interactive system that is able to reconstruct faces the user is thinking of based on their eye gaze behavior. The idea is to present multiple faces to a user while their eyes are being tracked. The gaze data is then used to morph between the facial areas of the different faces. This is repeated iteratively until the system converges or the user is satisfied. This method should be compared against the current state of the art in a user study.

Supervisor: Florian Strohm

Distribution: 20% Literature, 10% Data Preparation, 40% Implementation, 30% Analysis and Evaluation

Requirements: Interest in computer vision and eye-tracking

Neuroscience Inspired Computer Vision

Description: Artificial computational systems, leveraging physiological data, rival human performance in certain tasks. However, there is still a large gap between human and machine perception and understanding and thus motivation to bridge the gap.

On one hand, machines require large amounts of training data, generally trained on closed-world settings, and therefore there is a lack of out-of-domain generalization. Alternatively, humans can learn complex visual object categories from a fleeting number of examples. Models, such as ImageNet challenge winner (Krishevsky et al., 2012) trained on 1 million examples, is similar to the number of visual fixations a human makes in a year (approx. three saccades per second, when awake).

The nature of representations in biological systems compared to deep neural networks is quantitatively and qualitatively different. There is much more that neuro-science can teach deep learning! Thus, we propose to train a mapping of physiological feature vectors (i.e cortical activity) to DNN feature vectors, where the goal is to disentangle the information content.

In the scope of this project, the student will work on the task of image reconstruction using fMRI data. The student first re-implements the model from Beliy et al. 2019 as the baseline model. Then, they will experiment with using versus not using pre-trained networks, extracting features at different stages, etc. Subsequently, the student will implement their own novel system, such as using the curiosity driven reinforcement learning approach (Pathak et al. 2017) with episodic memory. The idea is to further understand what kind of information content is held within the fMRI data. Therefore, students will implement the model to reconstruct images using fMRI data, as well as exploring methods to incorporate additional physiological data (such as EEG or eye tracking data).

Supervisor: Ekta Sood and Florian Strohm

Distribution: 20% Literature, 15% Data Processing, 40% Implementation + Experiments, 25% Data Analysis and Evaluation

Requirements: Interest in computer vision and cognitive modeling, familiarity with data processing and analysis/statistics, experience with machine learning and it is helpful to have exposure to at least one of following frameworks — Tensorflow/PyTorch/Keras.

Literature: Roman Beliy, Guy Gaziv, Assaf Hoogi, Francesca Strappini, Tal Golan, and Michal Irani. 2019. From voxels to pixels and back: Self-supervision in natural image reconstruction from fMRI. Advances in Neural Information Processing Systems (NeurIPS).

Alex Krizhevsky, Ilya Sutskever, and Geoffrey E Hinton. 2012. Imagenet classification with deep convolutional neural networks. Advances in Neural Information Processing Systems (NeurIPS), volume 25.

Deepak Pathak, Pulkit Agrawal, Alexei A. Efros, and Trevor Darrell. 2017. Curiosity-driven exploration by self-supervised prediction. Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPR).

Reasoning about Intermodal Correspondences — Aligned Representation Learning with Human Attention

Description: Vision and language are two instrumental modalities in which humans obtain knowledge and conceptualize the world. Recent machine learning approaches have aimed to combine these modalities in a variety of tasks in order to leverage the human ability of learning from our rich perceptual environment — multimodal knowledge acquisition from our interactive environment.

A method by Izadinia et al. (2015) combines these two modalities by their proposed ”Segment-Phrase Table” (SPT), for the task of semantic segmentation. The SPT is a trainable sized curation of one to one correspondences between each element of a given set (there are two sets — image segments and text phrases); this means they created bijective associations between their image segments and textual phrases for said segments in a given image. For example, if the image depicts a horse jumping over a log, they generate (semi-supervised) textual phrases for segments of that image — these segments are organized in a parse tree like nature, such that hierarchy of the features in each segment are taken into account. The goal of their work was to cluster, localize and segment instances by reasoning about commonalities (Izadinia et al., 2015). Ultimately, the authors showed SOTA results over benchmark datasets in the task of semantic segmentation and perhaps more importantly supplied their model with richer semantic understanding such that the phrases acted as a catalyst for enhanced contextual reasoning.

To that end, Aytar et al. (2017) explored leveraging various aligned visual, textual and audio corpora, in order to implement their neural network which learned the aligned representations of the three introduced modalities. Specifically, they trained with pairs of either images and sentences or images and audio (extracted from videos), however their network could also learn aligned representations between corresponding text and audio (though was never trained to learn these alignments). The method of evaluation of these aligned representations was a cross-modal retrieval task: given a query, with only one of the three inputs (for example an image), they evaluated the accuracy of retrieval for the corresponding aligned pair from one of the other modalities (for example the corresponding audio). The results indicate that cross modal aligned representations improved performance on retrieval and classification tasks. In addition, their network was able to learn representations between audio and text without being explicitly trained with said alignments — supporting the notion that the images perhaps bridged the gap between these other two modalities.

Given previous work, this project aims to further implement and reason about these intermodal correspondences. For a given task, I propose implementing an existing SOTA semantic segmentation model (from a paper or github repository) (the task is not a hard constraint), which incorporates the SPT proposed by Izadinia et al. (2015). In addition to the alignment between text phrases and image segments, the task would be to introduce and train the network with a third modality, that being gaze data (corresponding gaze data on images can be found for various benchmark datasets, such as MScoco or SUN). The objective is to create a composition of three bijective functions — align three modalities for a high level task. The research question is, can we translate/bridge information across these three modalities. To that end, how does gaze information enhance a task such as semantic segmentation, does it provide the network with even more semantic context — for example, using the SPT we can evaluate for cross-modal semantic similarity. Additionally, as with the work proposed from Aytar et al. (2017), though this network would never be trained with text and gaze pairs, the aim would be to investigate if the network can learn representations between text and gaze by using the images to bridge the gap.

Supervisor: Ekta Sood

Distribution: 20% Literature, 20% Data Collection, 40% Implementation, 20% Data Analysis and Evaluation.

Requirements: Interest in multimodal machine learning, familiarity with data processing and analysis/statistics, experience with Tensorflow/PyTorch/Keras.

Literature: Yusuf Aytar, Carl Vondrick, and Antonio Torralba. 2017. See, hear, and read: Deep aligned representations. arXiv:1706.00932. Retrieved from https://arxiv.org/abs/1706.00932

Hamid Izadinia, Fereshteh Sadeghi, Santosh Kumar Divvala, Yejin Choi, and Ali Farhadi. 2015. Segment-phrase table for semantic segmentation, visual entailment and paraphrasing. Proceedings of the 2015 International Conference on Computer Vision (ICCV).

Interpreting Attention-based Visual Question Answering Models with Multimodal Human Visual Attention

Description: In visual question answering (VQA) the task for the network is to answer questions about a given image and a natural language (Agrawal et al. 2015). VQA is a machine comprehension task and as such allows researchers to test if models are able to learn reasoning capabilities between various modalities. It is a field of interest for researchers from various backgrounds as it intertwines fields of computer vision and natural language processing. Inspired by human visual attention, in recent years many models incorporate various neural attention algorithms. Attention mechanisms supply the network with the ability to focus on a particular instance/element of the input sequence. Subsequently, attention-based networks often yield better results. However, in recent years, performance is not just the main focus. In addition to high performing models, researchers (Das et al. 2016, Sood et al. 2021) are also interested in interpreting neural attention and bridging the gap between neural attention and human visual attention.

To that end, in this project the student will interpret the learned multimodal attention of off-the-shelf VQA models which have performed with SOTA results. The student will extend the work of Sood et al. 2021, by using the same approach for evaluating human versus machine multimodal attention, on additional SOTA attentive VQA models with a focus on the current high performing transformer-based networks.

Supervisor: Ekta Sood

Distribution: 20% Literature, 10% Data Collection, 30% Implementation, 40% Data Analysis and Evaluation.

Requirements: Interest in attention based neural networks, cognitive science, and multimodal representation learning, experience with data analysis/statistics, familiarity with machine learning and exposure to at least one the following frameworks — Tensorflow/PyTorch/Keras.

Literature: Aishwarya Agrawal, Stanislaw Antol, Jiasen Lu, Margaret Mitchell, Dhruv Batra, C. Lawrence Zitnick, and Devi Parikh. 2015. VQA: Visual question answering. arxiv:1505.00468. Retrieved from https://arxiv.org/abs/1505.00468

Abhishek Das, Harsh Agrawal, C. Lawrence Zitnick, Devi Parikh, and Dhruv Batra. 2016. Human attention in visual question answering: Do humans and deep networks look at the same regions? arxiv:1606.03556. Retrieved from https://arxiv.org/abs/1606.03556

Ekta Sood, Fabian Kögel, Florian Strohm, Prajit Dhar, and Andreas Bulling. 2021. VQA-MHUG: A gaze dataset to study multimodal neural attention in VQA. Proceedings of the 2021 ACL SIGNLL Conference on Computational Natural Language Learning (CoNLL).

Interpreting Neural Attention in NLP with Human Visual Attention

Description: Recent work has explored integrated gaze data into attention mechanisms for various computer vision and NLP tasks. However, it is still unknown which types of model architectures and for which tasks this human-like attention component is actually helpful.

In addition, recent work in our department has uncovered that the attention weights computed in the pre-trained transformer network, XLNet, does not actually correlate with the human visual attention, yet the model outperforms previous methods on a question answering task.

We then ask the question, do all transformer networks outperform more traditional models such as LSTMs while also having the least similarity to human visual attention? Is there an impact on the language model methods of various pre-trained networks in NLP or is this significant variance to human attention a bi-product of the pre-trained nature of these systems? Do transformer networks used in computer vision tasks also divert from human attention?

In the scope of this project, the student will implement the BeRT transformer network and the GPT-2 transformer network for the task of question answering on a popular benchmark dataset. The student will extend our previous interpretability paper (Sood et al. 2020) by comparing human visual attention to pre-trained transformer network attention on the same reading comprehension QA task — particularly experimenting with extracting attention at various layers and comparing temporal changes to divergence to human attention as the network trains. As the next step, the student will implement their own transformer network, which is not pre-trained, and perform the same analysis.

Supervisor: Ekta Sood

Distribution: 20% Literature, 25% Data processing, 30% Implementation and Experiments, 25% Analysis and Evaluation

Requirements: Interest in machine reading comprehension/question answering tasks, human visual attention, explainability/neural interpretability, and NLP. In addition, experience with machine learning, data processing and statistics. It will be helpful for the student to have experience with Tensorflow and Pytorch.

Literature: Ekta Sood, Simon Tannert, Diego Frassinelli, Andreas Bulling, and Ngoc Thang Vu. 2020. Interpreting attention models with human visual attention in machine reading comprehension. Proceedings of the 2020 ACL SIGNLL Conference on Computational Natural Language Learning (CoNLL).

Consciousness in Encoded States

Description: Bengio 2017 states that our consciousness can be defined as awareness (or attentiveness) of a small amount of high-level and abstract features which are deemed relevant to the task in question. The research question is: can this method of using encoded states instead of fine-tuning (or both), be more generalizable between tasks? If so, we might be able to argue for modeling some form of consciousness or perhaps better to be inspired from Bengio 2017, in which authors postulate this would be some form of consciousness.

The idea here would be to better understand the encoded state of pre-trained language models. Reading over this paper (Bengio 2017), authors suggest that a form of consciousness can be seen as “the sense of awareness or attention rather than qualia”. In the context of this project, the student will implement the pre-trained BeRT model (encoder) for three NLP tasks (QA, Paraphrasing, Summarization) [for this the student will have to implement a data augmentation method to obtain parallel corpora for the tasks] — these are the baseline models. Then the student will need to swap the encoded state of the models for the different tasks, but will not fine-tune (the student will not continue to train for the alternative/different task). At this point, the student will perform analysis in order to understand if the model performs well when swapping the encoded state, to see if the attention maps look different, and to interpret the loss landscape visualization (compared to the baseline models). Next the student will implement a method to regularize the encoded states between tasks (instead of fine-tuning, like which was done for the baseline). The student will evaluate model performance when swapping the encoded state, and repeat qualitative analysis as described above. Lastly, the student will both regularize the encoded state and also fine-tune and then evaluate the model to compare to the previous experimental results.

Supervisor: Ekta Sood

Distribution: 20% Literature, 20% Data Collection, 30% Implementation, 30% Analysis

Requirements: Interest in NLP, cognitive modeling, neural interpretability and generalizability. Familiar with data processing and data augmentation methods, some machine learning experience, and it is helpful to have exposure to the following framework – Tensorflow, Pytorch or Keras.

Literature: Yoshua Bengio. 2017. The consciousness prior. arXiv:1709.08568. Retrieved from https://arxiv.org/abs/1709.08568

Emotions with Gaze

Description: Humans express emotions via various modalities, such as with facial expressions, vocality, and gestural prompts. Surface forms of emotion expression have been researched and observed on social media platforms (via natural language processing techniques), through speech analysis (within the domain of digital phonetics and signal processing), facial cues (within the domain of computer vision), etc., where all domains of research are integrating aspects of cognitive science for their particular tasks.

The notion behind this project would be to find a relationship between gaze and affect expression, as well as speech, leveraging gaze information in order to predict emotion classes. The project would be to use two existing multimodal datasets in which annotations of facial landmarks, speech data, and emotion labels are present. Then the student would use a tool, such as Openface: A general-purpose face recognition library with mobile applications (Amos et al., 2016), for gaze estimation to investigate/analyze the temporal relationship between gaze, emotion, and speech. The student will explore joint representations/multimodal embeddings of speech, gaze, facial features, etc. The goal would be to use a machine learning approach — to build a classifier in order to predict various emotions using facial expressions, gaze information, and speech. Then they would test the model on the subset of videos unseen during training (perhaps also evaluate their model(s) with adversarial attacks via “fake emotional” videos, i.e movies, etc). The project is about exploring various human joint multimodal representations in human emotion expression — various modalities coupled with affect expression: gaze, facial expressions, and speech.

Supervisor: Ekta Sood

Distribution: 20% Literature, 30% Data Collection, 30% Implementation, 20% Data Analysis and Evaluation.

Requirements: Interest in affective computing, emotion analysis, and multimodal machine learning approaches, familiar with data processing and analysis/statistics, experience with machine learning and the following frameworks — Tensorflow/PyTorch/Keras.

Literature: Brandon Amos, Bartosz Ludwiczuk, and Mahadev Satyanarayanan. 2016. Openface: A general-purpose face recognition library with mobile applications. CMU School of Computer Science, Technical Report CMU-CS-16-118.

Predicting Neurological Deficits with Gaze

Description: Gaze patterns in individuals with Autism Spectrum Disorder (ASD) have been heavily researched as atypical eye gaze movements are part of the diagnosis signs. For example, Yaneva et al. (2015) built an ASD corpus extracting gaze data while individuals with ASD performed web-related tasks; the focus on this research was to enhance web accessibility for people with ASD and to work towards ASD detection leveraging gaze information. Regneri et al. (2016) leveraged gaze data for discourse analysis on individuals with and without ASD, their main objective being to evaluate text cohesion between varied groups. Leveraging information from gaze patterns has assisted researchers in further understanding the underlying aspects of language comprehension and production for individuals with neurological deficits such as ASD.

In this project, we aim to utilize machine learning approaches to build on such previous work from psychologists and neuroscientists. Due to the high variability and lack of resources in such a domain, the main objective is to continue building the path towards assistive interactive technologies for individuals with ASD. The task is to use gaze data to classify ASD; the research question is, can we classify individuals generally according to their gaze patterns. As eye tracking data can be sparse and labor intensive to retrieve, particularly for novel groups, we propose to extract the data using OpenFace (Amos et al., 2016). The data can be crawled from, for example, Youtube videos of people with ASD, specifically extracting varied group ages such that we can bin subtypes. The goal here would be to classify ASD with gaze information using a ML approach, and then to further conduct in-depth analysis on variations of gaze patterns specific to variables such as age.

Supervisor: Ekta Sood

Distribution: 20% Literature, 30% Data Collection, 30% Implementation, 20% Data Analysis and Evaluation.

Requirements: Interest in cognitive science (particularly with the human visual perception) and assistive technologies, familiarity with data processing and analysis/statistics, experience with machine learning. It would also be helpful to have exposure to the following frameworks — Tensorflow/PyTorch/Keras.

Literature: Brandon Amos, Bartosz Ludwiczuk, and Mahadev Satyanarayanan. 2016. Openface: A general-purpose face recognition library with mobile applications. CMU School of Computer Science, Technical Report CMU-CS-16-118.

Michaela Regneri and Diane King. 2016. Automated discourse analysis of narrations by adolescents with autistic spectrum disorder. Proceedings of the 7th Workshop on Cognitive Aspects of Computational Language Learning.

Victoria Yaneva, Irina Temnikova, and Ruslan Mitkov. 2015. Accessible texts for autism: An eye-tracking study. Proceedings of the 17th International ACM SIGACCESS Conference on Computers & Accessibility (ASSETS).

Image Synthesis for Appearance-based Gaze Estimation

Description: Appearance-based gaze estimation (Zhang et al. 2015) is a task in computer vision with the goal of predicting either the 3D gaze direction or the 2D point of regard. With recent advancements in machine learning and, in particular deep learning, recent methods have achieved state of the art performance for this task. However, such methods require large-scale labelled datasets which are difficult to collect. A promising approach is to rely on methods that can synthesise eye or face images (He at al. 2019, Yu & Odobez 2020, Zheng et al., 2020) and use these images as a data augmentation technique.

The goal of this project is to first evaluate existing methods and then propose a novel, improved method to synthesise images. Image source: He et al. 2019.

Supervisor: Mihai Bâce

Distribution: 30% Literature, 10% Data Preparation, 40% Implementation, 20% Analysis and Evaluation

Requirements: Computer Vision. Experience with Tensorflow/PyTorch

Literature: Zhang, Xucong, Yusuke Sugano, Mario Fritz and Andreas Bulling. 2015. Appearance-based gaze estimation in the wild. Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

He, Zhe, Spurr, Adrian, Zhang, Xucong, & Hilliges, Otmar. 2019. Photo-Realistic Monocular Gaze Redirection Using Generative Adversarial Networks. Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV).

Yu, Yuechen and Jean-Marc Odobez. 2020. Unsupervised Representation Learning for Gaze Estimation. Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

Zheng, Yufeng, Seonwook Park, Xucong Zhang, Shalini De Mello and Otmar Hilliges. 2020. Self-Learning Transformations for Improving Gaze and Head Redirection. arxiv:2010.12307. Retrieved from https://arxiv.org/abs/2010.12307.

Multi-duration Saliency Prediction for Mobile User Interfaces

Description: Saliency maps are a common methodology to visualise and estimate the users’ visual attention on a number of visual stimuli (e.g. images, videos, user interfaces, etc.). Traditionally, such saliency maps are obtained either with special-purpose eye tracking equipment or with regular commodity cameras that track the users’ gaze. However, cameras may not always be available. Recent works have proposed computational models of attention, i.e. methods that estimate the users’ attention directly from the stimuli. Yet these methods do not capture the temporal dynamics of gaze behaviour.

In this work, we will investigate prior works that proposed multi-duration saliency maps, i.e. saliency maps that capture different viewing durations, for natural images (Fosco et al. 2020) and extend this concept to mobile user interfaces. For evaluations, we will rely on existing datasets (e.g. the Mobile UI Saliency dataset (Leiva et al. 2020)) Image source: Leiva et al., 2020.

Supervisor: Mihai Bâce

Distribution: 30% Literature, 10% Data Preparation, 30% Implementation, 30% Analysis and Evaluation

Requirements: Experience with Tensorflow/PyTorch

Literature: Fosco, Camilo Luciano, Anelise Newman, Pat Sukhum, Yun Bin Zhang, Nanxuan Zhao, Aude Oliva, and Zoya Bylinskii. 2020. How much time do you have? Modeling multi-duration saliency. Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

Leiva, Luis A., Yunfei Xue, Avya Bansal, Hamed Tavakoli, Tuðçe Köroðlu, Jingzhou Du, Niraj Dayama and Antti Oulasvirta. 2020. Understanding visual saliency in mobile user interfaces. Proceedings of the 22nd International Conference on Human-Computer Interaction with Mobile Devices and Services.

Visual Scanpath Prediction for Mobile User Interfaces

Description: In contrast to modelling visual attention through saliency, which generally only provides a single 2D distribution of visual attention over a stimuli, scanpaths can fully capture the temporal dynamics of gaze behaviour and provide a temporal ordering on human fixations. Consequently, methods to predict scanpaths (Bao & Chen 2020, Assens et al. 2018), i.e. to generate plausible and human-like sequences of fixations on new visual stimuli has many practical applications in human-computer interaction and beyond. However, there are currently no methods to predict scanpaths on mobile user interfaces (UIs).

In this project, we will explore and propose new methods for scanpath prediction on mobile UIs. We will first evaluate existing methods that predict scanpaths on natural images and then investigate ways to improve and design a method that is specifically tailored for this use case. For evaluations, we will use the Mobile UI Saliency dataset (Leiva et al. 2020).

Supervisor: Mihai Bâce

Distribution: 30% Literature, 10% Data Preparation, 30% Implementation, 30% Analysis and Evaluation

Requirements: Experience with Tensorflow/PyTorch

Literature: Bao, Wentao and Zhenzhong Chen. 2020. Human scanpath prediction based on deep convolutional saccadic model. Neurocomputing 404.

Assens, Marc, Xavier Giro-i-Nieto, Kevin McGuinness and N. O'Connor. 2018. PathGAN: Visual Scanpath Prediction with Generative Adversarial Networks. arxiv:1809.00567. Retrieved from https://arxiv.org/abs/1809.00567

Leiva, Luis A., Yunfei Xue, Avya Bansal, Hamed Tavakoli, Tuðçe Köroðlu, Jingzhou Du, Niraj Dayama and Antti Oulasvirta. 2020. Understanding visual saliency in mobile user interfaces. Proceedings of the 22nd International Conference on Human-Computer Interaction with Mobile Devices and Services.

AI-assisted Semi-automatic Labelling of Eye Contact Data

Description: With recent advancements in sensing and, in particular, camera technology, it has become possible to collect large-scale datasets of human behaviour. However, manually annotating and labelling such data is not only tedious but also time consuming. Many existing tools and methods have been designed to annotate single or individual samples, yet it is often the case that images share similarities and often the same annotation label.

In this project, we will work on a novel method for semi-automatic labelling of eye contact data. We will use the existing EMVA dataset (Bâce et al. 2020) and propose methods to group images that, e.g., share the same head pose or gaze direction and, hence, share the same eye contact label. This project will involve exploring state-of-the-art methods for head pose estimation (Ruiz et al. 2018) and appearance-based gaze estimation (Zhang et al. 2015). Image source: www.emva-dataset.org

Supervisor: Mihai Bâce

Distribution: 30% Literature, 10% Data Preparation, 30% Implementation, 30% Analysis and Evaluation

Requirements: Familiarity and interest in machine learning

Literature: Bâce, Mihai, Sander Staal and Andreas Bulling. 2020. Quantification of users' visual attention during everyday mobile device interactions. Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems.

Ruiz, Nataniel, Eunji Chong and James M. Rehg. 2018. Fine-grained head pose estimation without keypoints. Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW).

Zhang, Xucong, Yusuke Sugano, Mario Fritz and Andreas Bulling. 2015. Appearance-based gaze estimation in the wild. Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) .

Multi-Step Visual Reasoning in Video Grounded Dialog

Description: Investigating the reasoning capabilities of neural networks is gaining more and more importance, especially at the intersection of Computer Vision (CV) and Natural Language Processing (NLP). Former works showed promising results on different tasks, e.g. Visual Question Answering (VQA) or Visual Dialog. In fact, these works managed to achieve near-perfect accuracy on multiple datasets such as CLEVR or CLEVR-Dialog.

In this project, we aim at tackling the more challenging task of Video Dialog by designing novel deep learning methods capable of dealing with the time-dependent textual and visual inputs. The major outcome of this project is achieving new state-of-the-art results on the newly-published DVD dataset.

Supervisor: Adnen Abdessaied

Distribution: 20% Literature, 60% Implementation, 20% Analysis

Requirements: Strong Knowledge in DL, CV, and NLP. Previous experience with PyTorch is a plus

Literature: Le, Hung, Chinnadhurai Sankar, Seungwhan Moon, Ahmad Beirami, Alborz Geramifard, Satwik Kottur. 2021. DVD: A diagnostic dataset for multi-step reasoning in video grounded dialogue. Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing.

Ding, David, Felix Hill, Adam Santoro, Malcolm Reynolds, Matt Botvinick. 2020. Object-based attention for spatio-temporal reasoning: Outperforming neuro-symbolic models with flexible distributed architectures. arxiv:2012.0850. Retrieved from https://arxiv.org/abs/2012.0850

Shah, Muhammad, Shikib Mehri and Tejas Srinivasan. 2020. Reasoning over history: context aware visual dialog. Proceedings of the First International Workshop on Natural Language Processing Beyond Text. Proceedings of the First International Workshop on Natural Language Processing Beyond Text.

Diagnostic Dataset for Video Dialog based on CLEVRER

Description: Neuro-Symbolic AI is becoming more and more relevant for tasks at the intersection of Computer Vision (CV) and Natural Language Processing (NLP). For some tasks (e.g. Visual Question Answering, Visual Dialog), appropriate datasets for training Neuro-Symbolic models exist. However, for other tasks like video grounded dialog, these datasets are still scarce.

In this project, we aim at generating a Video Dialog dataset based on the CLEVRER Videos. Our dataset can then be used to test the generalizability of methods previously trained on similar datasets, e.g. DVD.

Supervisor: Adnen Abdessaied

Distribution: 20% Literature, 40% Implementation, 40% Analysis

Requirements: Strong Knowledge in Python and interest in DL and ML.

Literature:Le, Hung, Chinnadhurai Sankar, Seungwhan Moon, Ahmad Beirami, Alborz Geramifard, Satwik Kottur. 2021. DVD: A diagnostic dataset for multi-step reasoning in video grounded dialogue. Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing.

Yi, Kexin, Chuang Gan, Yunzhu Li, Pushmeet Kohli, Jiajun Wu, Antonio Torralba, Joshua B. Tenenbaum. 2020. CLEVRER: Collision Events for Video Representation and Reasoning. International Conference on Learning Representations.

Bachelor thesis projects

Detecting Personality during Group Interactions from Multimodal Behaviors

Description: This project is to detect personality from multimodal behavioural data within small groups. You will use a dataset (as shown in the figure) that recorded videos when 3 or 4 participants had a group discussion (Müller et al. 2018). Participants also reported their personality by completing a Big Five personality questionnaire. Speech, body movement, posture, eye contact and facial expression can be extracted from the video to predict a participant’s personality via machine learning or deep learning.

Supervisor: Guanhua Zhang

Distribution: 20% Literature, 20% Data Preparation, 40% Implementation, 20% Data Analysis

Requirements: Strong programming skills, experience with machine learning/deep learning and data analysis

Literature: Philipp Müller, Michael Xuelin Huang, and Andreas Bulling. 2018. Detecting Low Rapport During Natural Interactions in Small Groups from Non-Verbal Behaviour. Proceedings of the 23rd International Conference on Intelligent User Interfaces (IUI).

Personality Recognition under Data Scarcity

Description: Personality is stable, which means no matter how much data we collect from a person, he or she always has only one corresponding score for a personality trait that will be the ground-truth label in recognition. For example, we may collect data for hours from a user, but all the samples map to only one value. This may lead to a data scarcity problem, i.e., training a traditional recognition model, especially a regression model, requires the dataset to cover a wide range of score values. In this case, hundreds or even thousands of participants who have different personality scores have to be recruited, increasing the difficulty of data collection and personality research. Therefore, this project will try to handle the problem via data science and machine learning techniques, e.g., data augmentation, embedding, representation learning, unsupervised learning, etc., to effectively predict personality from a small amount of data. Several personality datasets, like AMIGOS (Miranda-Correa et al. 2018) shown in the figure, can be used to test and evaluate your approaches.

Supervisor: Guanhua Zhang

Distribution: 20% Literature, 10% Data Preparation, 50% Implementation, 20% Data Analysis

Requirements: Strong programming skills, experience with machine learning and data analysis

Literature: Juan Abdon Miranda-Correa, Motjaba Khomami Abadi, Nicu Sebe, and Ioannis Patras. 2018. AMIGOS: A dataset for affect, personality and mood research on individuals and groups. IEEE Transactions on Affective Computing.

Interpreting Attention-based Visual Question Answering Models with Multimodal Human Visual Attention

Description: In visual question answering (VQA) the task for the network is to answer questions about a given image and a natural language (Agrawal et al. 2015). VQA is a machine comprehension task and as such allows researchers to test if models are able to learn reasoning capabilities between various modalities. It is a field of interest for researchers from various backgrounds as it intertwines fields of computer vision and natural language processing. Inspired by human visual attention, in recent years many models incorporate various neural attention algorithms. Attention mechanisms supply the network with the ability to focus on a particular instance/element of the input sequence. Subsequently, attention-based networks often yield better results. However, in recent years, performance is not just the main focus. In addition to high performing models, researchers (Das et al. 2016, Sood et al. 2021) are also interested in interpreting neural attention and bridging the gap between neural attention and human visual attention.

To that end, in this project the student will interpret the learned multimodal attention of off-the-shelf VQA models which have performed with SOTA results. The student will extend the work of Sood et al. 2021, by using the same approach for evaluating human versus machine multimodal attention, on additional SOTA attentive VQA models with a focus on the current high performing transformer-based networks.

Supervisor: Ekta Sood

Distribution: 20% Literature, 10% Data Collection, 30% Implementation, 40% Data Analysis and Evaluation.

Requirements: Interest in attention based neural networks, cognitive science, and multimodal representation learning, experience with data analysis/statistics, familiarity with machine learning and exposure to at least one the following frameworks — Tensorflow/PyTorch/Keras.

Literature: Aishwarya Agrawal, Stanislaw Antol, Jiasen Lu, Margaret Mitchell, Dhruv Batra, C. Lawrence Zitnick, and Devi Parikh. 2015. VQA: Visual question answering. arxiv:1505.00468. Retrieved from https://arxiv.org/abs/1505.00468

Abhishek Das, Harsh Agrawal, C. Lawrence Zitnick, Devi Parikh, and Dhruv Batra. 2016. Human attention in visual question answering: Do humans and deep networks look at the same regions? arxiv:1606.03556. Retrieved from https://arxiv.org/abs/1606.03556

Ekta Sood, Fabian Kögel, Florian Strohm, Prajit Dhar, and Andreas Bulling. 2021. VQA-MHUG: A gaze dataset to study multimodal neural attention in VQA. Proceedings of the 2021 ACL SIGNLL Conference on Computational Natural Language Learning (CoNLL).

Predicting Neurological Deficits with Gaze

Description: Gaze patterns in individuals with Autism Spectrum Disorder (ASD) have been heavily researched as atypical eye gaze movements are part of the diagnosis signs. For example, Yaneva et al. (2015) built an ASD corpus extracting gaze data while individuals with ASD performed web-related tasks; the focus on this research was to enhance web accessibility for people with ASD and to work towards ASD detection leveraging gaze information. Regneri et al. (2016) leveraged gaze data for discourse analysis on individuals with and without ASD, their main objective being to evaluate text cohesion between varied groups. Leveraging information from gaze patterns has assisted researchers in further understanding the underlying aspects of language comprehension and production for individuals with neurological deficits such as ASD.

In this project, we aim to utilize machine learning approaches to build on such previous work from psychologists and neuroscientists. Due to the high variability and lack of resources in such a domain, the main objective is to continue building the path towards assistive interactive technologies for individuals with ASD. The task is to use gaze data to classify ASD; the research question is, can we classify individuals generally according to their gaze patterns. As eye tracking data can be sparse and labor intensive to retrieve, particularly for novel groups, we propose to extract the data using OpenFace (Amos et al., 2016). The data can be crawled from, for example, Youtube videos of people with ASD, specifically extracting varied group ages such that we can bin subtypes. The goal here would be to classify ASD with gaze information using a ML approach, and then to further conduct in-depth analysis on variations of gaze patterns specific to variables such as age.

Supervisor: Ekta Sood

Distribution: 20% Literature, 30% Data Collection, 30% Implementation, 20% Data Analysis and Evaluation.

Requirements: Interest in cognitive science (particularly with the human visual perception) and assistive technologies, familiarity with data processing and analysis/statistics, experience with machine learning. It would also be helpful to have exposure to the following frameworks — Tensorflow/PyTorch/Keras.

Literature: Brandon Amos, Bartosz Ludwiczuk, and Mahadev Satyanarayanan. 2016. Openface: A general-purpose face recognition library with mobile applications. CMU School of Computer Science, Technical Report CMU-CS-16-118.

Michaela Regneri and Diane King. 2016. Automated discourse analysis of narrations by adolescents with autistic spectrum disorder. Proceedings of the 7th Workshop on Cognitive Aspects of Computational Language Learning.

Victoria Yaneva, Irina Temnikova, and Ruslan Mitkov. 2015. Accessible texts for autism: An eye-tracking study. Proceedings of the 17th International ACM SIGACCESS Conference on Computers & Accessibility (ASSETS).