Understanding and Modelling Mobile Graphical User Interfaces

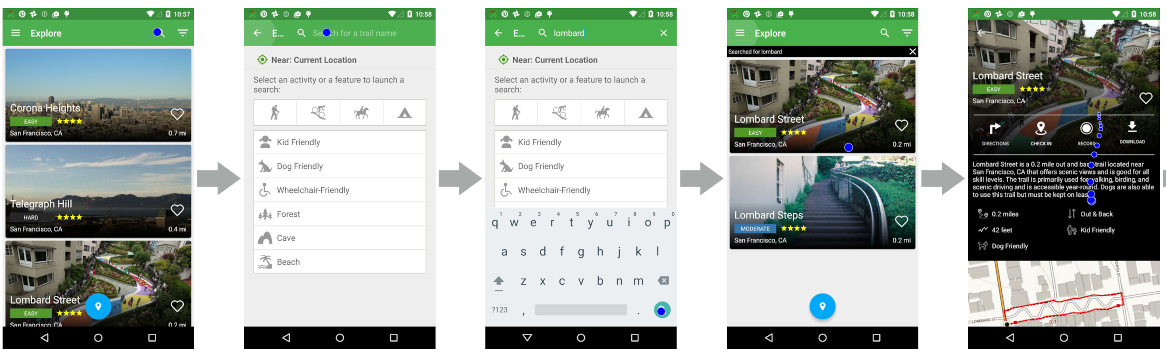

Description: Representation learning has been used to model graphical user interfaces (GUIs) by mapping screenshot images into dense, semantic embedding vectors. The distances between such embeddings indicate how similar the original GUIs are, which helps researchers and designers understand GUIs. Touch behaviour, which triggers the transitions between GUIs, should carry useful information for understanding the relationships between GUIs and for predicting likely GUI sequences. This project aims to incorporate touch behaviour into GUI modelling, compare the resulting model with state-of-the-art (SOTA) methods, and gain insights into GUIs and touch behaviour.
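To make the idea of comparing GUIs by embedding distance concrete, here is a minimal sketch that ranks screens by cosine similarity. The screen names, the 4-dimensional vectors, and the helper functions `cosine_similarity` and `rank_by_similarity` are all hypothetical and chosen for illustration only. Real embeddings would come from a trained model, and cosine similarity is just one possible choice of measure.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimensionality")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def rank_by_similarity(query, candidates):
    """Return (name, similarity) pairs sorted from most to least similar."""
    scored = [(name, cosine_similarity(query, vec))
              for name, vec in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical embeddings, made up for illustration (not model output).
login_screen = [0.9, 0.1, 0.0, 0.2]
candidates = {
    "signup_screen": [0.8, 0.2, 0.1, 0.3],
    "map_screen": [0.0, 0.9, 0.7, 0.1],
}

ranking = rank_by_similarity(login_screen, candidates)
for name, score in ranking:
    print(f"{name}: {score:.3f}")
```

With these made-up vectors, the sign-up screen ranks above the map screen as a neighbour of the login screen. A touch-aware model would be expected to change such rankings by also reflecting which screens users actually navigate between.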

Supervisor: Guanhua Zhang

Distribution: 20% Literature, 10% Data preparation, 50% Deep model implementation, 20% Analysis and discussion

Requirements: Strong programming skills, practical experience in building deep networks with PyTorch/TensorFlow/Keras

Literature: Biplab Deka et al. 2017. Rico: A mobile app dataset for building data-driven design applications. Proceedings of the 30th Annual ACM Symposium on User Interface Software and Technology (UIST).

Bryan Wang et al. 2021. Screen2Words: Automatic mobile UI summarization with multimodal learning. Proceedings of the 34th Annual ACM Symposium on User Interface Software and Technology (UIST).

Toby Jia-Jun Li et al. 2021. Screen2Vec: Semantic embedding of GUI screens and GUI components. Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems (CHI).