MPIIGroupInteraction

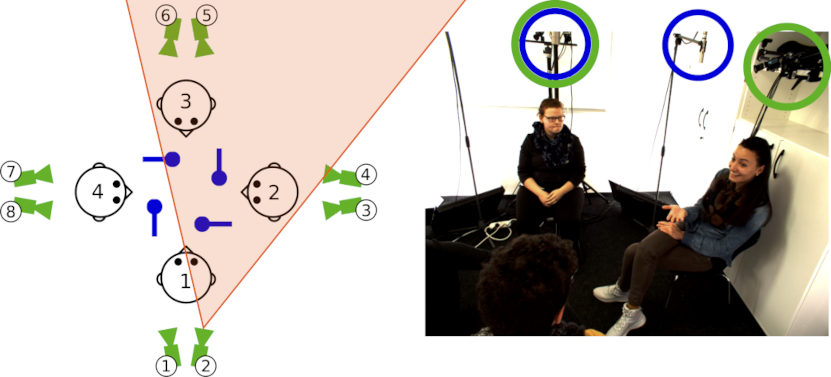

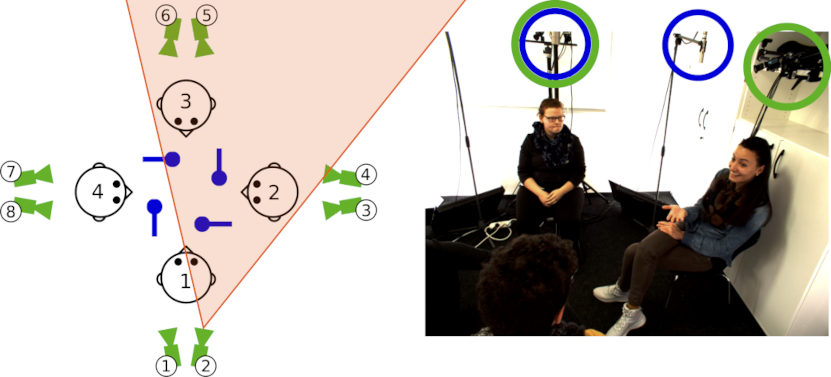

Recording Setup:

The data recording took place in a quiet office in which a large area had been cleared of furniture. No one else used the office during the recordings. To capture rich visual information and allow for natural bodily expressions, we used a 4DV camera system to record frame-synchronised video from eight ambient cameras. Specifically, two cameras were placed behind each participant, positioned slightly higher than the participant's head (see the green indicators in the figure). With this configuration, a near-frontal view of each participant's face could be captured throughout the experiment, even when participants turned their heads while interacting with each other. In addition, we recorded audio with four Behringer B5 microphones with omnidirectional capsules. To record high-quality audio while avoiding occlusion of the faces, we placed the microphones in front of and slightly above the participants (see the blue indicators in the figure above).
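The camera count follows directly from the layout: two cameras per participant in a group of at most four gives eight ambient cameras. The sketch below writes the layout down as a small configuration object; the class and field names are hypothetical and are not part of the dataset release.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RecordingSetup:
    """Hypothetical summary of the recording layout described above."""

    seats: int = 4             # groups had at most four participants
    cameras_per_seat: int = 2  # placed behind each participant, above head height
    microphones: int = 4       # Behringer B5 with omnidirectional capsules

    @property
    def total_cameras(self) -> int:
        # Two cameras per seat account for the eight ambient cameras.
        return self.seats * self.cameras_per_seat


setup = RecordingSetup()
print(setup.total_cameras)  # 8
```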

Recording Procedure:

We recruited 78 German-speaking participants (43 female, aged between 18 and 38 years) from a German university campus, resulting in 12 group interactions with four participants and 10 interactions with three participants. When forming the groups, we ensured that participants in the same group did not know each other prior to the study. To prevent learning effects, every participant took part in only one interaction. Before each group interaction, we told the participants that first personal encounters could result in various artifacts that we were not interested in, and that we would therefore first hold a pilot discussion for them to get to know each other, followed by the actual recording. We intentionally misled the participants into believing that the recording system would be turned on only after the pilot discussion, so that they would behave naturally. In fact, the recording system was running from the beginning and there was no follow-up recording. To increase engagement, we prepared a list of potential discussion topics and asked each group to choose the topic that was most controversial among its members. The experimenter then left the room and returned about 20 minutes later to end the discussion. Finally, participants were debriefed, in particular about the deception, and gave free and informed consent for their data to be used and published for research purposes.
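The reported group composition is internally consistent: 12 four-person groups and 10 three-person groups give 22 interactions and 12 × 4 + 10 × 3 = 78 participants. A minimal check (variable names are illustrative only):

```python
# Group composition reported above: (number of groups, participants per group).
groups = [(12, 4), (10, 3)]

total_groups = sum(n for n, _ in groups)
total_participants = sum(n * size for n, size in groups)

print(total_groups, total_participants)  # 22 78
```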

Annotations:

* Speaking turns were annotated for all recordings. If several people speak at the same time, the annotations reflect this overlap. Backchannels (short listener responses such as "mhm") do not count as speaking turns.

* Eye contact was annotated by observers for all participants every 15 seconds. For each sample, annotators indicated whether the participant was looking at another participant's face and, if so, at whom.
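The two annotation types can be held in memory as overlapping speaking-turn intervals and sparse gaze samples. The sketch below is an assumption for illustration: the actual file format is described in the Readme, and the type aliases, function names, and the choice to start sampling at t = 0 are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical in-memory representation; the actual file format is described in the Readme.
Turn = Tuple[str, float, float]        # (participant, start_s, end_s); backchannels are not turns
GazeSample = Dict[str, Optional[str]]  # participant -> face looked at, or None


def speakers_at(turns: List[Turn], t: float) -> List[str]:
    """All participants holding a speaking turn at time t; overlapping turns are allowed."""
    return sorted({p for p, start, end in turns if start <= t < end})


def eye_contact_sample_times(duration_s: float, step_s: float = 15.0) -> List[float]:
    """Annotation time points every step_s seconds, assumed to start at t = 0."""
    return [i * step_s for i in range(int(duration_s // step_s) + 1)]


turns: List[Turn] = [("A", 0.0, 4.0), ("B", 3.5, 7.0), ("C", 8.0, 10.0)]
# At t = 0 s, A and B look at each other's faces; C looks at no face.
gaze: Dict[float, GazeSample] = {0.0: {"A": "B", "B": "A", "C": None}}

print(speakers_at(turns, 3.7))         # ['A', 'B']  (overlapping speech)
print(speakers_at(turns, 7.5))         # []
print(eye_contact_sample_times(40.0))  # [0.0, 15.0, 30.0]
```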

Download: Please download the EULA here and send it to the address below. We will then provide you with the link to access the dataset. The Readme file is available here.

Contact: Dominike Thomas,

The data is only to be used for non-commercial scientific purposes. If you use this dataset in a scientific publication, please cite the following papers:

- MultiMediate: Multi-modal Group Behaviour Analysis for Artificial Mediation

Philipp Müller, Dominik Schiller, Dominike Thomas, Guanhua Zhang, Michael Dietz, Patrick Gebhard, Elisabeth André, Andreas Bulling

Proc. ACM Multimedia (MM), pp. 4878–4882, 2021.


Artificial mediators are a promising way to support human group conversations, but at present their abilities are limited by insufficient progress in group behaviour analysis. The MultiMediate challenge addresses, for the first time, two fundamental group behaviour analysis tasks in well-defined conditions: eye contact detection and next speaker prediction. For training and evaluation, MultiMediate makes use of the MPIIGroupInteraction dataset, consisting of 22 three- to four-person discussions, as well as an unpublished test set of six additional discussions. This paper describes the MultiMediate challenge and presents the challenge dataset, including novel fine-grained speaking annotations that were collected for the purpose of MultiMediate. Furthermore, we present baseline approaches and ablation studies for both challenge tasks.

@inproceedings{mueller21_mm,
  title = {MultiMediate: Multi-modal Group Behaviour Analysis for Artificial Mediation},
  author = {M{\"{u}}ller, Philipp and Schiller, Dominik and Thomas, Dominike and Zhang, Guanhua and Dietz, Michael and Gebhard, Patrick and Andr{\'{e}}, Elisabeth and Bulling, Andreas},
  year = {2021},
  pages = {4878--4882},
  doi = {10.1145/3474085.3479219},
  booktitle = {Proc. ACM Multimedia (MM)}
}
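The next speaker prediction task named in the MultiMediate abstract can be illustrated with a deliberately simple formulation: extract speaker changes from turn annotations and, for each current speaker, predict the successor seen most often. This is a hypothetical sketch, not the MultiMediate task definition or one of its baselines; the turn format and function names are assumptions.

```python
from collections import Counter
from typing import Dict, List, Tuple

Turn = Tuple[str, float, float]  # (participant, start_s, end_s); hypothetical format


def speaker_transitions(turns: List[Turn]) -> List[Tuple[str, str]]:
    """(current, next) speaker pairs wherever consecutive turns change speaker."""
    ordered = sorted(turns, key=lambda turn: turn[1])  # order turns by start time
    return [
        (prev[0], nxt[0])
        for prev, nxt in zip(ordered, ordered[1:])
        if prev[0] != nxt[0]
    ]


def fit_majority_baseline(pairs: List[Tuple[str, str]]) -> Dict[str, str]:
    """For each current speaker, predict the next speaker seen most often."""
    counts: Dict[str, Counter] = {}
    for cur, nxt in pairs:
        counts.setdefault(cur, Counter())[nxt] += 1
    return {cur: c.most_common(1)[0][0] for cur, c in counts.items()}


turns: List[Turn] = [
    ("A", 0.0, 4.0), ("B", 3.5, 7.0), ("A", 7.0, 9.0),
    ("C", 9.0, 12.0), ("A", 12.0, 13.0), ("B", 13.0, 15.0),
]
pairs = speaker_transitions(turns)
print(pairs)                         # [('A', 'B'), ('B', 'A'), ('A', 'C'), ('C', 'A'), ('A', 'B')]
print(fit_majority_baseline(pairs))  # {'A': 'B', 'B': 'A', 'C': 'A'}
```

Such a frequency baseline ignores all non-verbal cues, which is exactly the information a learned model would be expected to exploit.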

- Detecting Low Rapport During Natural Interactions in Small Groups from Non-Verbal Behavior

Philipp Müller, Michael Xuelin Huang, Andreas Bulling

Proc. ACM International Conference on Intelligent User Interfaces (IUI), pp. 153–164, 2018.


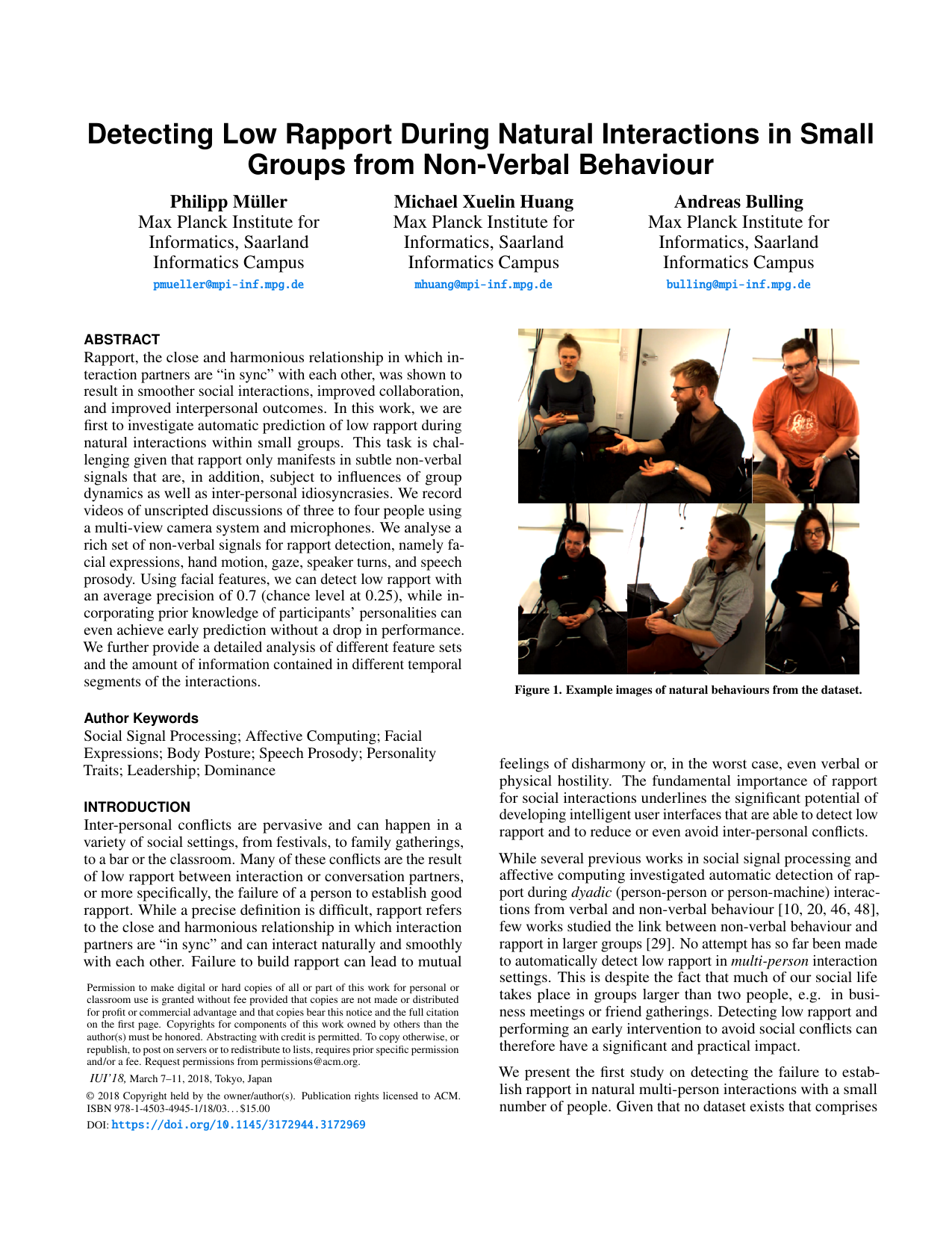

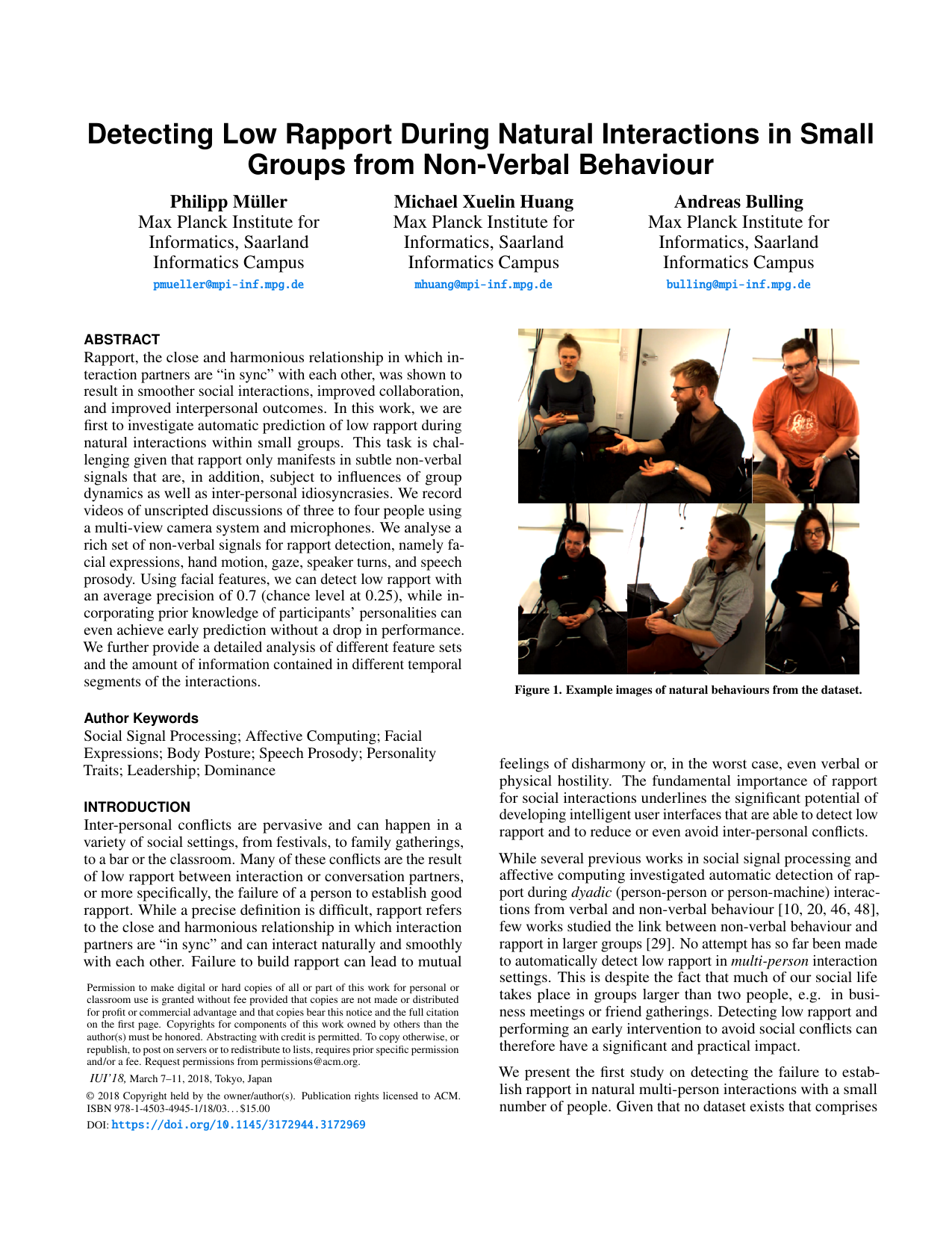

Rapport, the close and harmonious relationship in which interaction partners are "in sync" with each other, has been shown to result in smoother social interactions, improved collaboration, and improved interpersonal outcomes. In this work, we are the first to investigate automatic prediction of low rapport during natural interactions within small groups. This task is challenging given that rapport only manifests in subtle non-verbal signals that are, in addition, subject to influences of group dynamics as well as inter-personal idiosyncrasies. We record videos of unscripted discussions of three to four people using a multi-view camera system and microphones. We analyse a rich set of non-verbal signals for rapport detection, namely facial expressions, hand motion, gaze, speaker turns, and speech prosody. Using facial features, we can detect low rapport with an average precision of 0.7 (chance level at 0.25), while incorporating prior knowledge of participants' personalities even enables early prediction without a drop in performance. We further provide a detailed analysis of different feature sets and the amount of information contained in different temporal segments of the interactions.

@inproceedings{mueller18_iui,
  title = {Detecting Low Rapport During Natural Interactions in Small Groups from Non-Verbal Behavior},
  author = {M{\"{u}}ller, Philipp and Huang, Michael Xuelin and Bulling, Andreas},
  year = {2018},
  pages = {153--164},
  booktitle = {Proc. ACM International Conference on Intelligent User Interfaces (IUI)},
  doi = {10.1145/3172944.3172969}
}
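Average precision (AP), the metric quoted in the rapport abstract, summarises how well positive samples (there, low-rapport instances) are ranked above negatives. Below is a minimal sketch of the common non-interpolated definition; it is not claimed to match the paper's evaluation code, and the function names are hypothetical. It also shows why an AP chance level is usually taken to be the fraction of positive samples: random scores yield an AP close to that fraction (slightly above it for small sample sizes).

```python
import random
from typing import Sequence


def average_precision(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Non-interpolated AP: mean of the precision values at the rank of each positive."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits, precisions = 0, []
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


def random_ranking_ap(n: int, n_pos: int, trials: int = 1000, seed: int = 0) -> float:
    """Mean AP of uniformly random scores over many trials."""
    rng = random.Random(seed)
    labels = [1] * n_pos + [0] * (n - n_pos)
    return sum(
        average_precision([rng.random() for _ in range(n)], labels)
        for _ in range(trials)
    ) / trials


print(average_precision([0.9, 0.8, 0.3, 0.1], [1, 0, 1, 0]))  # (1/1 + 2/3) / 2, about 0.833
print(random_ranking_ap(100, 25))  # roughly 0.28: near the positive rate 0.25, slightly above it
```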

- Robust Eye Contact Detection in Natural Multi-Person Interactions Using Gaze and Speaking Behaviour

Philipp Müller, Michael Xuelin Huang, Xucong Zhang, Andreas Bulling

Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA), pp. 1–10, 2018.


Eye contact is one of the most important non-verbal social cues and fundamental to human interactions. However, detecting eye contact without specialized eye tracking equipment poses significant challenges, particularly for multiple people in real-world settings. We present a novel method to robustly detect eye contact in natural three- and four-person interactions using off-the-shelf ambient cameras. Our method exploits the fact that, during conversations, people tend to look at the person who is currently speaking. Harnessing the correlation between people's gaze and speaking behaviour therefore allows our method to automatically acquire training data during deployment and adaptively train eye contact detectors for each target user. We empirically evaluate the performance of our method on a recent dataset of natural group interactions and demonstrate that it achieves a relative improvement of more than 60% over the state-of-the-art method and also outperforms a head-pose-based baseline.

@inproceedings{mueller18_etra,
  author = {M{\"{u}}ller, Philipp and Huang, Michael Xuelin and Zhang, Xucong and Bulling, Andreas},
  title = {Robust Eye Contact Detection in Natural Multi-Person Interactions Using Gaze and Speaking Behaviour},
  booktitle = {Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA)},
  year = {2018},
  pages = {1--10},
  doi = {10.1145/3204493.3204549}
}
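The central idea of the eye contact paper, that listeners tend to look at the current speaker, can be turned into automatically generated, noisy training labels. The sketch below shows only that labelling step under simplifying assumptions: the function name, the turn format, and the rule of keeping only frames with exactly one active speaker are illustrative choices, not the paper's exact procedure. In a full pipeline, gaze features from the labelled frames would then be used to train a per-user eye contact detector.

```python
from typing import Dict, List, Tuple

Turn = Tuple[str, float, float]  # (participant, start_s, end_s); hypothetical format


def weak_gaze_labels(target: str, frame_times: List[float], turns: List[Turn]) -> Dict[float, str]:
    """Noisy gaze-target labels for `target`, derived from speaking behaviour only.

    A frame gets the label S only if S is the single active speaker and the target
    is silent; frames with no speaker, overlapping speech, or the target speaking
    stay unlabelled.
    """
    labels: Dict[float, str] = {}
    for t in frame_times:
        active = {p for p, start, end in turns if start <= t < end}
        if target in active or len(active) != 1:
            continue
        labels[t] = next(iter(active))  # assume the listener looks at the speaker
    return labels


turns: List[Turn] = [("A", 0.0, 4.0), ("B", 3.5, 7.0), ("C", 8.0, 10.0)]
print(weak_gaze_labels("C", [1.0, 3.7, 5.0, 7.5, 9.0], turns))  # {1.0: 'A', 5.0: 'B'}
```

Discarding frames with overlapping speech trades label quantity for label quality; the remaining labels are still noisy, since listeners do not look at the speaker all the time.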