Evaluation of Appearance-Based Methods and Implications for Gaze-Based Applications

Xucong Zhang, Yusuke Sugano, Andreas Bulling

Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI), pp. 1–13, 2019.

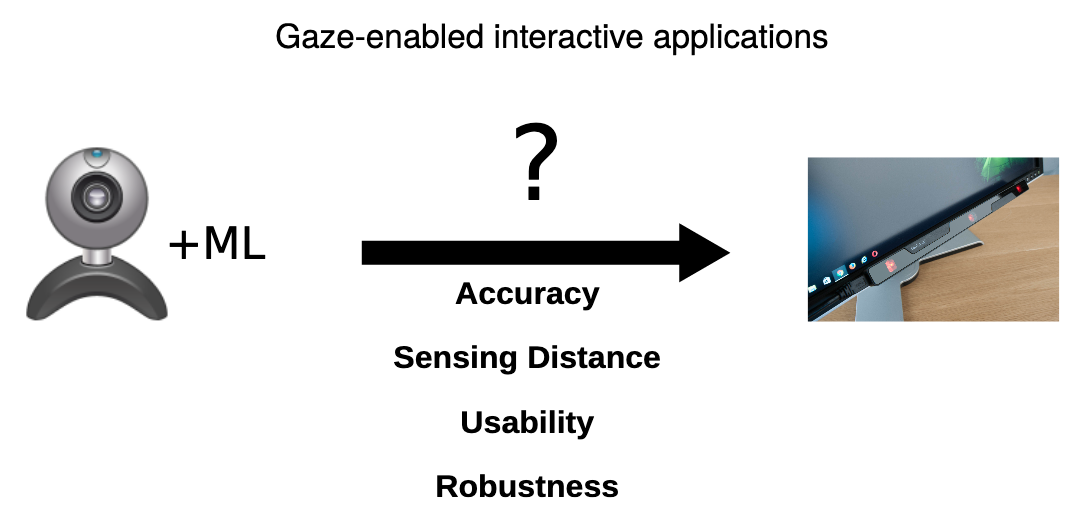

We study the gap between the dominant eye tracking approach using special-purpose equipment (right) and appearance-based gaze estimation using off-the-shelf cameras and machine learning (left) in terms of gaze estimation accuracy, sensing distance, usability (need for personal calibration), and robustness (to glasses and to indoor/outdoor use).

Abstract

Appearance-based gaze estimation methods that only require an off-the-shelf camera have improved significantly, but they are still not widely used in the human-computer interaction (HCI) community. This is partly because it remains unclear how they perform compared to model-based approaches and to the dominant, special-purpose eye tracking equipment. To address this limitation, we evaluate the performance of state-of-the-art appearance-based gaze estimation in interaction scenarios with and without personal calibration, indoors and outdoors, at different sensing distances, and for users with and without glasses. We discuss our findings and their implications for the most important gaze-based applications, namely explicit eye input, attentive user interfaces, gaze-based user modelling, and passive eye monitoring. To democratise the use of appearance-based gaze estimation and interaction in HCI, we finally present OpenGaze (www.opengaze.org), the first software toolkit for appearance-based gaze estimation and interaction.
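Gaze estimation accuracy is commonly reported as the angular error between the estimated and ground-truth 3D gaze directions. The following is a minimal sketch that assumes gaze is given as 3D direction vectors and that the target lies on a plane straight ahead of the user at a known distance. The function names `angular_error_deg` and `on_screen_error_mm` are hypothetical and are not part of OpenGaze.

```python
import math


def angular_error_deg(g_est, g_true):
    """Angle in degrees between an estimated and a ground-truth 3D gaze vector."""
    dot = sum(a * b for a, b in zip(g_est, g_true))
    norm = math.sqrt(sum(a * a for a in g_est)) * math.sqrt(sum(b * b for b in g_true))
    if norm == 0:
        raise ValueError("gaze vectors must be non-zero")
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))


def on_screen_error_mm(error_deg, distance_mm):
    """Approximate offset on a plane perpendicular to the true gaze ray at
    distance_mm, for an estimated ray deviating by error_deg (simplified
    geometry: target assumed straight ahead of the user)."""
    return distance_mm * math.tan(math.radians(error_deg))


if __name__ == "__main__":
    print(angular_error_deg((0.1, -0.05, -1.0), (0.0, 0.0, -1.0)))
    print(on_screen_error_mm(5.0, 600.0))
```

For example, a 5° error at a 60 cm viewing distance corresponds to roughly 5 cm on the target plane. Under this simplified geometry, the on-screen offset caused by a fixed angular error grows with the distance between the user and the target.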

BibTeX

@inproceedings{zhang19_chi,
  author = {Zhang, Xucong and Sugano, Yusuke and Bulling, Andreas},
  title = {Evaluation of Appearance-Based Methods and Implications for Gaze-Based Applications},
  booktitle = {Proc. ACM SIGCHI Conference on Human Factors in Computing Systems (CHI)},
  year = {2019},
  doi = {10.1145/3290605.3300646},
  pages = {1--13}
}