Improving Natural Language Processing Tasks with Human Gaze-Guided Neural Attention

Ekta Sood, Simon Tannert, Philipp Müller, Andreas Bulling

arXiv:2010.07891, pp. 1–18, 2020.

Abstract

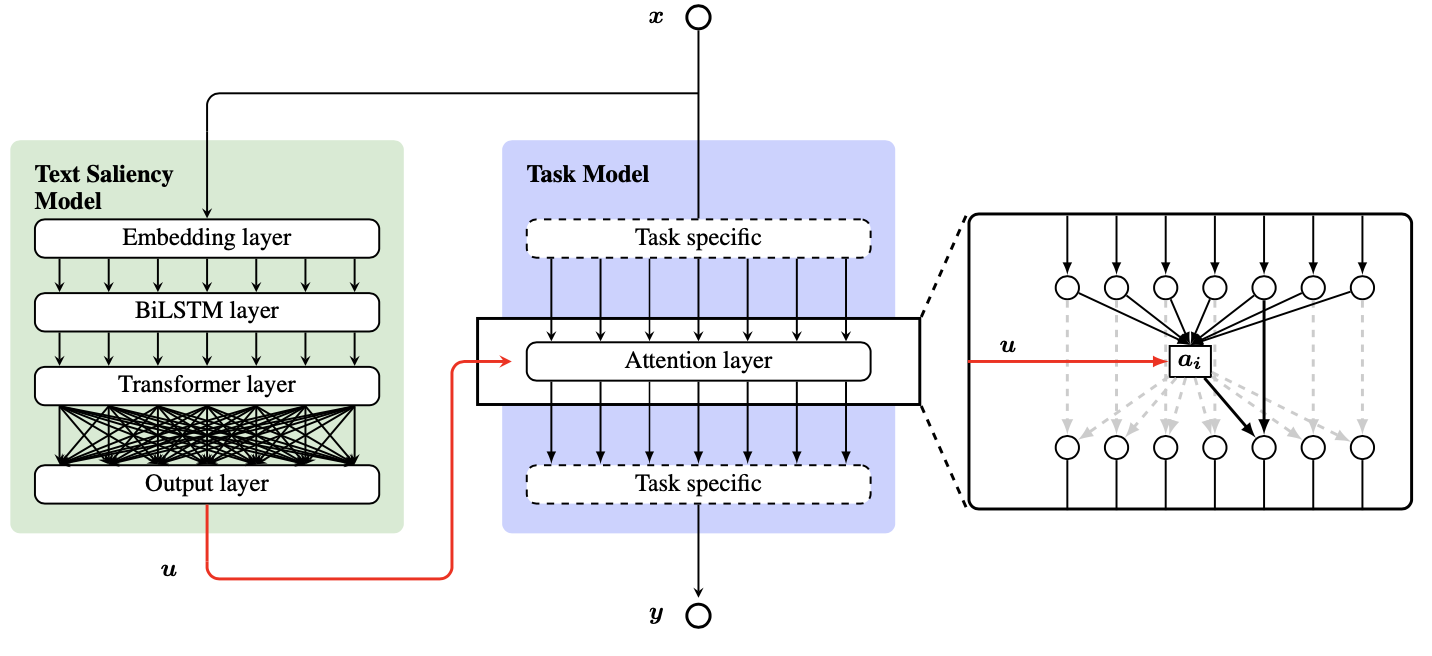

A lack of corpora has so far limited advances in integrating human gaze data as a supervisory signal in neural attention mechanisms for natural language processing (NLP). We propose a novel hybrid text saliency model (TSM) that, for the first time, combines a cognitive model of reading with explicit human gaze supervision in a single machine learning framework. We show on four different corpora that the fixation-duration predictions of our hybrid TSM are highly correlated with human gaze ground truth. We further propose a novel joint modelling approach that integrates the TSM predictions into the attention layer of a network designed for a specific upstream task, without the need for task-specific human gaze data. We demonstrate that our joint model outperforms the state of the art in paraphrase generation on the Quora Question Pairs corpus by more than 10% in BLEU-4 and achieves state-of-the-art performance for sentence compression on the challenging Google Sentence Compression corpus. As such, our work introduces a practical approach for bridging data-driven and cognitive models and demonstrates a new way to integrate human gaze-guided neural attention into NLP tasks.
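The abstract says that TSM predictions are integrated into the attention layer of a task network, but it does not give the combination rule. As a rough illustration only, the following sketch assumes one simple option: multiply the task network's softmax attention by the predicted per-token fixation durations (normalised into a saliency distribution) and renormalise. The functions `softmax` and `gaze_guided_attention`, the multiplicative rule, and the `eps` smoothing are hypothetical choices for illustration, not the authors' joint model; the paper itself describes the actual formulation.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of raw attention scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def gaze_guided_attention(task_scores: List[float],
                          predicted_durations: List[float],
                          eps: float = 1e-8) -> List[float]:
    """Reweight task attention by predicted per-token saliency (hypothetical rule).

    Assumption for illustration: attention is proportional to
    softmax(task_scores) * saliency, where saliency is the predicted
    fixation duration of each token normalised to sum to 1.
    """
    if len(task_scores) != len(predicted_durations):
        raise ValueError("need exactly one score and one duration per token")
    if any(d < 0 for d in predicted_durations):
        raise ValueError("fixation durations must be non-negative")

    task_attn = softmax(task_scores)

    # Smooth with eps so a token predicted as skipped (duration 0) keeps a tiny,
    # non-zero weight and the normaliser below can never be zero.
    raw = [d + eps for d in predicted_durations]
    raw_total = sum(raw)
    saliency = [r / raw_total for r in raw]

    # Combine the two distributions multiplicatively and renormalise.
    combined = [a * s for a, s in zip(task_attn, saliency)]
    norm = sum(combined)
    return [c / norm for c in combined]


if __name__ == "__main__":
    scores = [2.0, 0.5, 1.0]            # made-up task attention scores
    durations = [180.0, 0.0, 240.0]     # made-up predicted fixation durations (ms)
    weights = gaze_guided_attention(scores, durations)
    # The token predicted to be skipped receives (almost) no attention.
    print([round(w, 3) for w in weights])
```

With a multiplicative rule, a token that the reading model predicts will be skipped is effectively suppressed, while tokens the task network favours but readers only skim are damped rather than removed. An additive or gated mixture would be an equally plausible alternative under the same assumptions.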

Paper: sood20_arxiv.pdf

Paper Access: https://arxiv.org/abs/2010.07891

BibTeX

@techreport{sood20_arxiv,
  author = {Sood, Ekta and Tannert, Simon and Müller, Philipp and Bulling, Andreas},
  title = {Improving Natural Language Processing Tasks with Human Gaze-Guided Neural Attention},
  year = {2020},
  url = {https://arxiv.org/abs/2010.07891},
  pages = {1--18}
}