Anticipating Averted Gaze in Dyadic Interactions

Philipp Müller, Ekta Sood, Andreas Bulling

Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA), pp. 1-10, 2020.

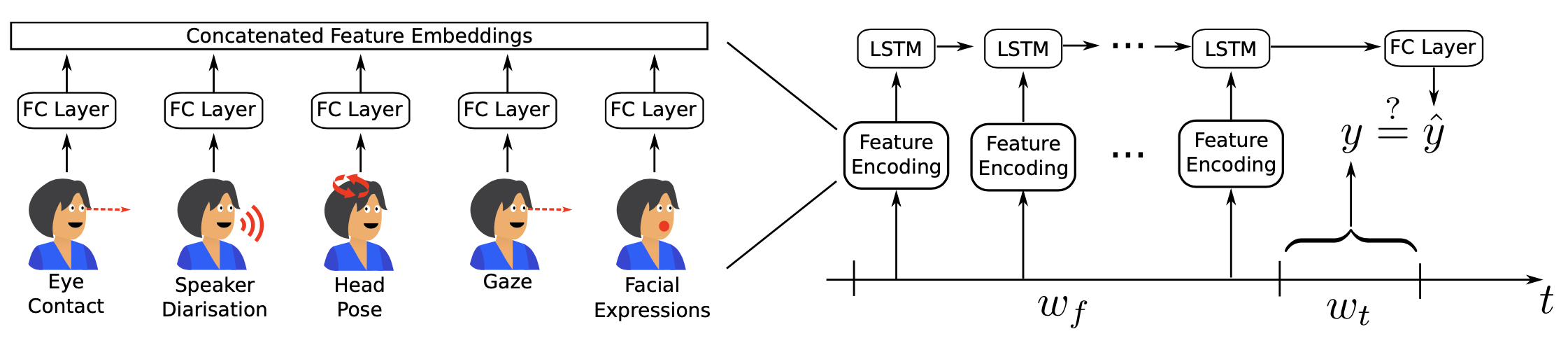

Overview of our eye contact anticipation method. Left: in the feature encoding network, each feature modality is fed through its own fully connected layer (FC Layer), and the resulting representations are concatenated. Right: features are extracted on a feature window w_f and passed, one timestep at a time, through an embedding network consisting of a fully connected layer before being fed to an LSTM network. At the last timestep of the feature window, the LSTM outputs a classification score, which is compared to the ground truth extracted from the target window w_t.
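The data flow in the figure can be sketched as a plain NumPy forward pass. This is a minimal illustration under stated assumptions, not the authors' implementation: the modality names and sizes (`facial_action_units`, `head_pose`, `speaking`), the layer widths, the ReLU after each per-modality FC layer, the sigmoid output and the random weights are all hypothetical, and training is omitted. It only shows the structure: one FC layer per modality with outputs concatenated at every timestep of the feature window w_f, a single-layer LSTM over those embeddings, and a single score read out at the last timestep.

```python
import numpy as np

rng = np.random.default_rng(0)


def dense(in_dim, out_dim):
    """Randomly initialised (W, b) for a fully connected layer (untrained, illustrative)."""
    return rng.normal(0.0, 0.1, (in_dim, out_dim)), np.zeros(out_dim)


def relu(x):
    return np.maximum(x, 0.0)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class AvertedGazeLSTM:
    """Hypothetical sketch: per-modality FC encoders -> concat -> LSTM -> score."""

    def __init__(self, modality_dims, embed_dim=8, hidden_dim=16):
        # One separate FC layer per feature modality (sizes are assumptions).
        self.encoders = {name: dense(d, embed_dim) for name, d in modality_dims.items()}
        concat_dim = embed_dim * len(modality_dims)
        # LSTM weights for the input, forget, cell and output gates, stacked.
        self.W_x, self.b = dense(concat_dim, 4 * hidden_dim)
        self.W_h, _ = dense(hidden_dim, 4 * hidden_dim)
        self.W_out, self.b_out = dense(hidden_dim, 1)
        self.hidden_dim = hidden_dim

    def encode(self, features_t):
        """Feature encoding for one timestep: FC per modality, then concatenate."""
        parts = [relu(features_t[name] @ W + b) for name, (W, b) in self.encoders.items()]
        return np.concatenate(parts)

    def forward(self, window):
        """window: one dict per timestep of w_f, mapping modality -> feature vector."""
        H = self.hidden_dim
        h, c = np.zeros(H), np.zeros(H)
        for features_t in window:
            z = self.encode(features_t) @ self.W_x + h @ self.W_h + self.b
            i, f = sigmoid(z[:H]), sigmoid(z[H:2 * H])
            g, o = np.tanh(z[2 * H:3 * H]), sigmoid(z[3 * H:])
            c = f * c + i * g
            h = o * np.tanh(c)
        # Classification score is produced only at the last timestep of the window.
        return float(sigmoid(h @ self.W_out + self.b_out)[0])


# Usage with random inputs: 30 timesteps, three hypothetical modalities.
modality_dims = {"facial_action_units": 17, "head_pose": 3, "speaking": 1}
model = AvertedGazeLSTM(modality_dims)
window = [{name: rng.normal(size=d) for name, d in modality_dims.items()} for _ in range(30)]
score = model.forward(window)
print(f"score for averted gaze in target window: {score:.3f}")
```

Keeping a separate encoder per modality lets each cue be projected to a common width before fusion, so a low-dimensional signal such as a speaking indicator is not swamped by a high-dimensional facial feature vector at the LSTM input.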

Abstract

We present the first method to anticipate averted gaze in natural dyadic interactions. The task of anticipating averted gaze, i.e., that a person will not make eye contact in the near future, remains unsolved despite its importance for human social encounters and for a number of applications, such as human-robot interaction and conversational agents. Our multimodal method is based on a long short-term memory (LSTM) network that analyses non-verbal facial cues and speaking behaviour. We empirically evaluate our method for different future time horizons on a novel dataset of 121 YouTube videos of dyadic video conferences (74 hours in total). We investigate person-specific and person-independent performance and demonstrate that our method clearly outperforms baselines in both settings. As such, our work sheds light on the tight interplay between eye contact and other non-verbal signals and underlines the potential of computational modelling and anticipation of averted gaze for interactive applications.
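To make the anticipation task concrete, the sketch below shows one way to turn per-frame eye contact annotations into labelled samples for several future time horizons. It rests on hypothetical assumptions, not details confirmed by the paper: a binary eye contact label per frame (1 = eye contact), a target window of `w_t` frames starting immediately after a feature window of `w_f` frames, and varying `w_t` as a stand-in for the time horizon. A sample is labelled averted gaze (1) when no frame of the target window shows eye contact, following the task definition above. The function name `make_samples` is illustrative.

```python
from typing import List, Tuple


def make_samples(eye_contact: List[int], w_f: int, w_t: int,
                 stride: int = 1) -> List[Tuple[int, int]]:
    """Return (t, label) pairs, where t is the first frame of the target window.

    Feature window: frames [t - w_f, t); target window: frames [t, t + w_t).
    label = 1 (averted gaze) if no frame in the target window has eye contact.
    """
    samples = []
    for t in range(w_f, len(eye_contact) - w_t + 1, stride):
        target = eye_contact[t:t + w_t]
        label = int(not any(target))
        samples.append((t, label))
    return samples


# Toy annotation sequence (hypothetical), evaluated for two horizons.
eye_contact = [1, 1, 0, 0, 0, 0, 1, 0, 0, 0]
for horizon in (2, 3):
    print(horizon, make_samples(eye_contact, w_f=3, w_t=horizon))
# 2 [(3, 1), (4, 1), (5, 0), (6, 0), (7, 1), (8, 1)]
# 3 [(3, 1), (4, 0), (5, 0), (6, 0), (7, 1)]
```

Because a longer target window contains the shorter one, extending `w_t` for a fixed start frame `t` can only turn an averted-gaze label into an eye-contact label, never the reverse; the features for each sample would be sliced from frames `t - w_f` to `t`.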

Paper: mueller20_etra.pdf

BibTeX

@inproceedings{mueller20_etra,
  title = {Anticipating Averted Gaze in Dyadic Interactions},
  author = {Müller, Philipp and Sood, Ekta and Bulling, Andreas},
  year = {2020},
  booktitle = {Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA)},
  doi = {10.1145/3379155.3391332},
  pages = {1--10}
}