Error-Aware Gaze-Based Interfaces for Robust Mobile Gaze Interaction

Michael Barz, Florian Daiber, Daniel Sonntag, Andreas Bulling

Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA), pp. 1–10, 2018.

Best paper award

Abstract

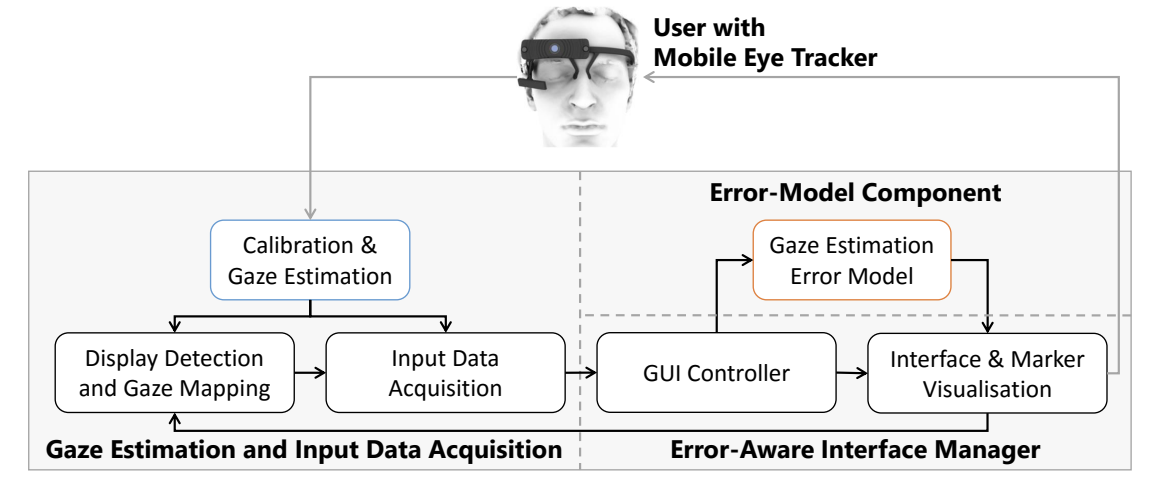

Gaze estimation error is unavoidable in head-mounted eye trackers and can severely hamper the usability and performance of mobile gaze-based interfaces, given that the error varies constantly across interaction positions. In this work, we explore error-aware gaze-based interfaces that estimate and adapt to gaze estimation error on the fly. We implement a sample error-aware user interface for gaze-based selection and different error compensation methods: a naïve approach that increases component size in direct proportion to the absolute error, a recent model by Feit et al. (CHI’17) that is based on the two-dimensional error distribution, and a novel predictive model that shifts gaze by a directional error estimate. We evaluate these models in a 12-participant user study and show that our predictive model significantly outperforms the others in terms of selection rate, particularly for small gaze targets. These results underline both the feasibility and the potential of next-generation error-aware gaze-based user interfaces.
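The three compensation strategies named in the abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation. The `Sample` type, all function names, the `scale` factor, the per-axis size rule `2 · (|mean offset| + 2σ)` used for the distribution-based model (our assumption about a Feit-et-al.-style sizing rule), and the inverse-distance-weighted interpolation that stands in for the paper's predictive error model are all hypothetical choices made for illustration.

```python
import math
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Sample:
    """Hypothetical validation sample: true target position and measured gaze (pixels)."""
    target: tuple[float, float]
    gaze: tuple[float, float]


def error_vectors(samples):
    # Directional error of each sample = measured gaze minus true target position.
    return [(s.gaze[0] - s.target[0], s.gaze[1] - s.target[1]) for s in samples]


def naive_size(base_size, samples, scale=2.0):
    # Naive compensation: enlarge the component in direct proportion to the
    # mean absolute (Euclidean) error. `scale` is a hypothetical tuning factor.
    abs_err = mean(math.hypot(dx, dy) for dx, dy in error_vectors(samples))
    return base_size + scale * abs_err


def distribution_size(samples):
    # Size from the 2-D error distribution: per axis, twice the absolute mean
    # offset plus two standard deviations (assumed sizing rule).
    errs = error_vectors(samples)
    xs = [dx for dx, _ in errs]
    ys = [dy for _, dy in errs]
    width = 2 * (abs(mean(xs)) + 2 * pstdev(xs))
    height = 2 * (abs(mean(ys)) + 2 * pstdev(ys))
    return width, height


def shift_gaze(gaze, samples, power=2.0):
    # Stand-in for a predictive model: estimate the directional error at the
    # current gaze position by inverse-distance weighting of the samples'
    # error vectors (indexed by measured gaze), then subtract that estimate.
    wsum = ex = ey = 0.0
    for s, (dx, dy) in zip(samples, error_vectors(samples)):
        d = math.dist(gaze, s.gaze)
        if d == 0:
            # Exact hit on a sample: use its error vector directly.
            return (gaze[0] - dx, gaze[1] - dy)
        w = 1.0 / d ** power
        wsum += w
        ex += w * dx
        ey += w * dy
    return (gaze[0] - ex / wsum, gaze[1] - ey / wsum)
```

With a constant offset of (10, −5) pixels in every sample, `shift_gaze((60.0, 45.0), samples)` maps the raw gaze back to about (50, 50), whereas the two sizing functions leave the gaze untouched and only enlarge the target. This contrast between shifting gaze and enlarging components is what the abstract's comparison turns on.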

Paper: barz18_etra.pdf

BibTeX

@inproceedings{barz18_etra,
  author = {Barz, Michael and Daiber, Florian and Sonntag, Daniel and Bulling, Andreas},
  title = {Error-Aware Gaze-Based Interfaces for Robust Mobile Gaze Interaction},
  booktitle = {Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA)},
  year = {2018},
  pages = {1--10},
  doi = {10.1145/3204493.3204536}
}