Mindful Explanations: Prevalence and Impact of Mind Attribution in XAI Research

Susanne Hindennach, Lei Shi, Filip Miletic, Andreas Bulling

Proc. ACM on Human-Computer Interaction (PACM HCI), 8(CSCW), pp. 1–42, 2024.

Abstract

Links

BibTeX

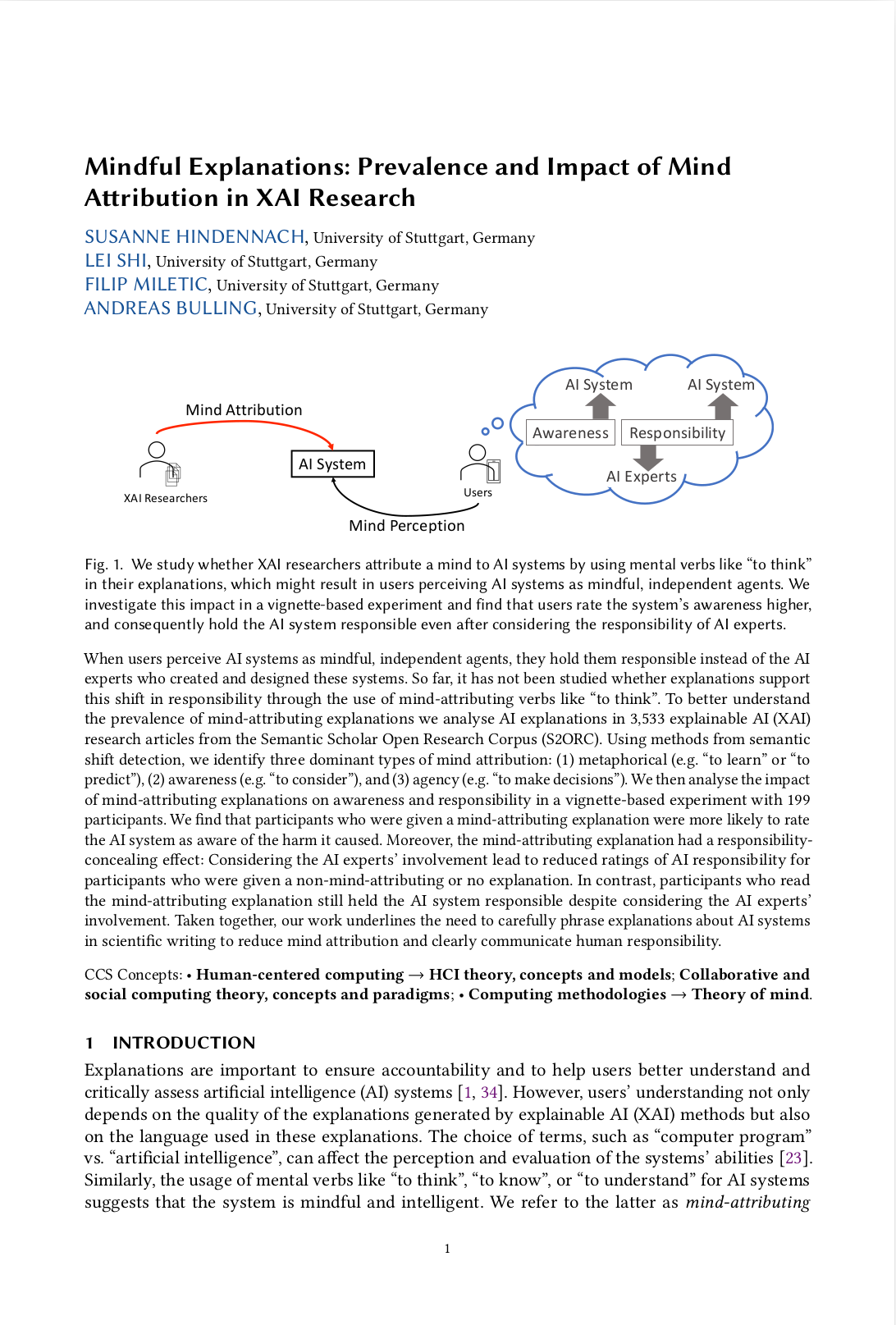

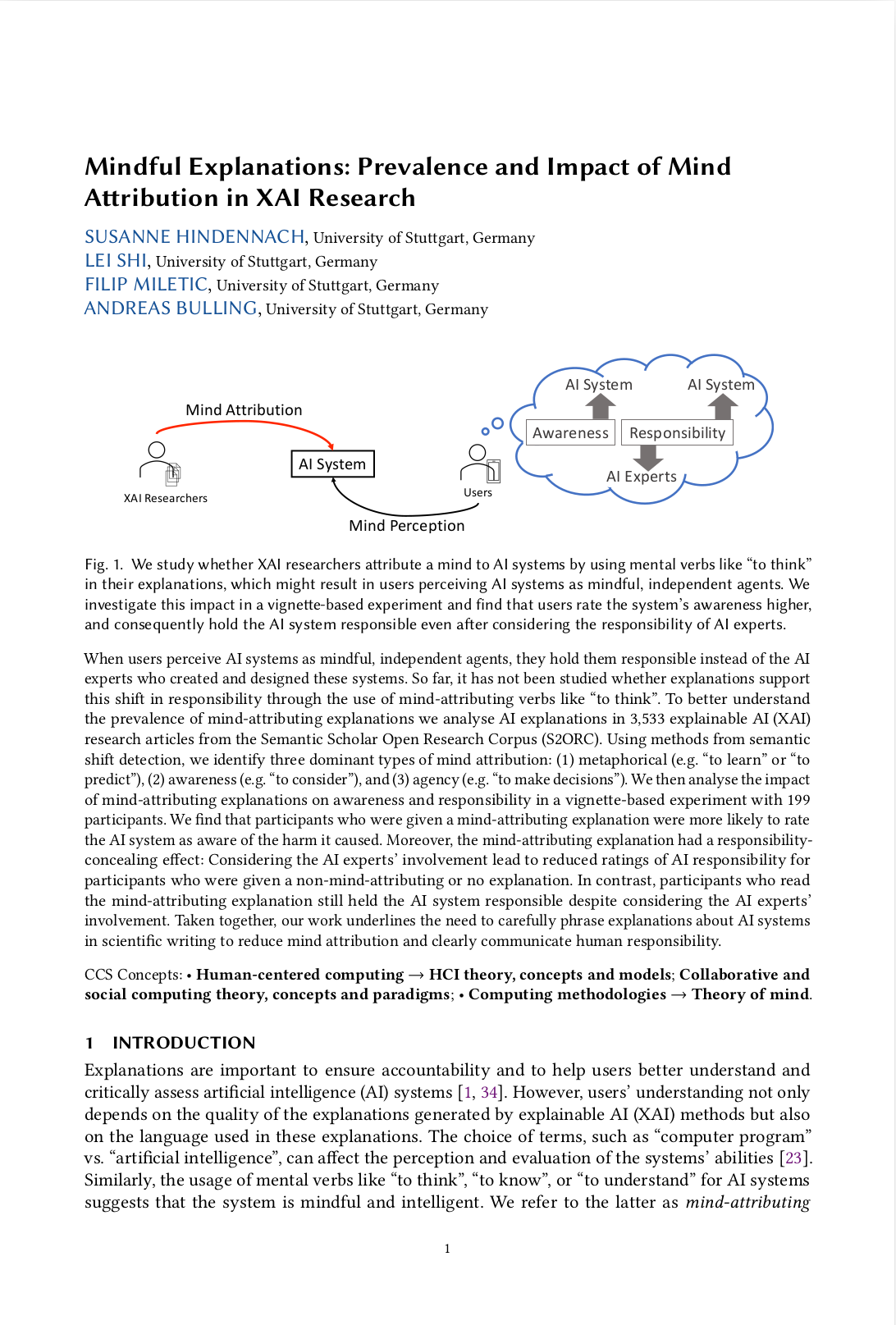

Project

When users perceive AI systems as mindful, independent agents, they hold them responsible instead of the AI experts who created and designed these systems. So far, it has not been studied whether explanations support this shift in responsibility through the use of mind-attributing verbs like "to think". To better understand the prevalence of mind-attributing explanations, we analyse AI explanations in 3,533 explainable AI (XAI) research articles from the Semantic Scholar Open Research Corpus (S2ORC). Using methods from semantic shift detection, we identify three dominant types of mind attribution: (1) metaphorical (e.g. "to learn" or "to predict"), (2) awareness (e.g. "to consider"), and (3) agency (e.g. "to make decisions"). We then analyse the impact of mind-attributing explanations on awareness and responsibility in a vignette-based experiment with 199 participants. We find that participants who were given a mind-attributing explanation were more likely to rate the AI system as aware of the harm it caused. Moreover, the mind-attributing explanation had a responsibility-concealing effect: for participants who were given a non-mind-attributing explanation or no explanation, considering the AI experts’ involvement led to reduced ratings of AI responsibility. In contrast, participants who read the mind-attributing explanation still held the AI system responsible despite considering the AI experts’ involvement. Taken together, our work underlines the need to phrase explanations of AI systems in scientific writing carefully, so as to reduce mind attribution and clearly communicate human responsibility.

@article{hindennach24_pacm,
  title = {Mindful Explanations: Prevalence and Impact of Mind Attribution in XAI Research},
  author = {Hindennach, Susanne and Shi, Lei and Miletic, Filip and Bulling, Andreas},
  year = {2024},
  pages = {1--42},
  volume = {8},
  number = {CSCW},
  doi = {10.1145/3641009},
  journal = {Proc. ACM on Human-Computer Interaction (PACM HCI)}
}